How do you make sure that your service contains the latest content and design? Here's how MOJ Digital teams are tackling the challenge.

Stopping the ‘cut and shut’ approach to projects

We’ve all seen examples of ‘cut and shut’ digital projects. These involve running the build and development work independently of the content and user experience before bolting the elements together at the end.

The results are rarely pretty.

Luckily, the MOJ Digital content team is an experienced bunch (among other achievements, we delivered 4 exemplar services last year). We're using what we've learnt to make sure development works hand-in-hand with content and design.

Start off in the right way

The discovery phase of any project is the ideal time to focus on identifying key content and interaction issues. You should:

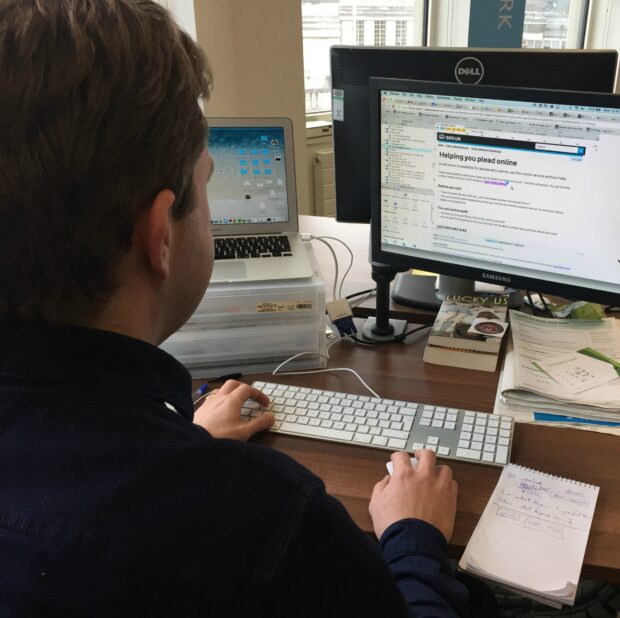

1. Get content, research and design working together ASAP

As Ben Terrett, former design director at the Government Digital Service, said, ‘words are as much the interface as design’. Your content designer, designer and researcher are all responsible for the user experience, so make sure they're working together from the start.

2. Go as deep into your content and design as quickly as you can

Get your content, research and design team to dig deep into the content and design early. The more they learn about users, the sooner they can ask the important questions and make structural decisions.

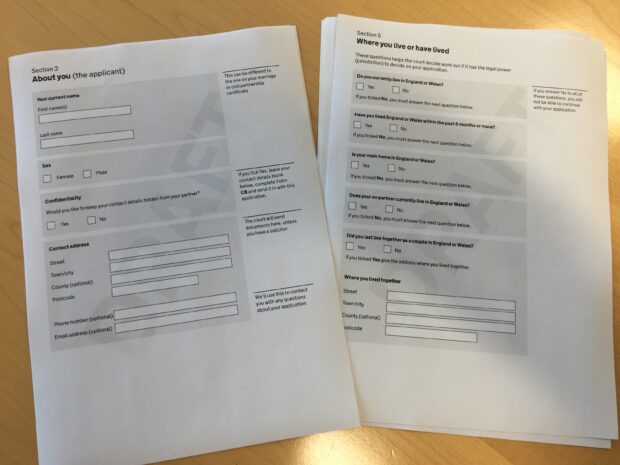

3. Test high-fidelity content with low-fidelity technology

Focus on detailed content with the lowest-fidelity technology available (like paper) to understand user problems and test how to solve them. This saves you from building components that later turn out to be unnecessary.

Moving on to build

Your researchers, content designers and designers will want to test numerous options, but your developers can’t (and shouldn’t) try to keep pace.

This can open up a growing gap, which then makes it harder to implement the latest versions of content and design. Our teams have worked in different ways to solve this challenge.

Use a detailed prototype

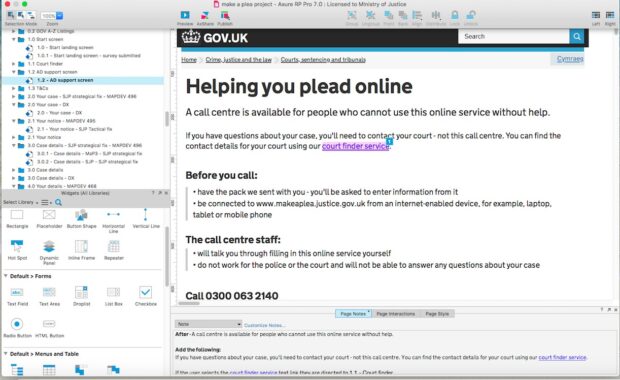

One of our teams maintains a high-fidelity prototype of the service using a tool called Axure (other prototyping tools are available).

The designer set everything up initially, making sure the screens mimic the look and feel of the service, and now uses the prototype to show and test interactions.

It also gives the content designer an environment to work with the content ‘in situ’. This means they can see how the content fits with the design and make as many changes as necessary directly in the prototype.

When the latest interactions and content have been tested with users, that version of the prototype is handed to the developers, who update their work accordingly.

This works well for remote teams because each screen can only be checked out by one team member at a time, so people can’t overwrite each other’s changes.

Build with content in mind

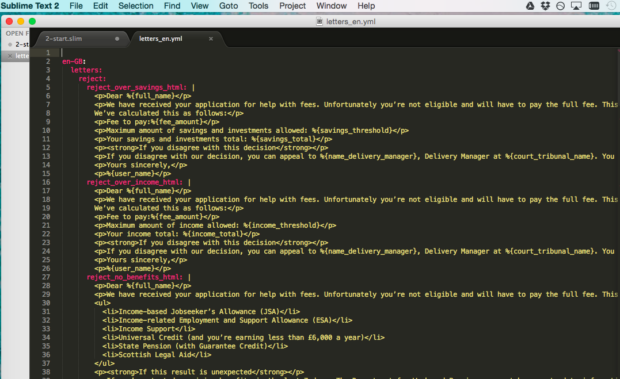

Other teams have taken a different approach. They’ve worked with the developers to build the application in a way that lets content designers work directly with the code.

In practice, this means the developers keep most of the content in one place and write automated tests that don’t break every time the copy changes.
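The post doesn’t say exactly how the teams set this up, but a minimal sketch of the idea, written in Python for illustration, might look like this. It assumes a hypothetical `CONTENT` dictionary standing in for a single content file, plus hypothetical `t` and `render_start_page` helpers; the copy itself is invented. The key point is that the test looks up the expected wording by key instead of hard-coding it.

```python
# Hypothetical single source of truth for page copy. In a real service this
# might be loaded from a content file; a dict keeps the sketch self-contained.
CONTENT = {
    "start.heading": "Tell us about your case",
    "start.continue_button": "Continue",
}


def t(key: str) -> str:
    """Look up copy by key so templates and tests never hard-code wording."""
    return CONTENT[key]


def render_start_page() -> str:
    # The template refers only to content keys, never to literal copy.
    return (
        f"<h1>{t('start.heading')}</h1>\n"
        f"<button>{t('start.continue_button')}</button>"
    )


def test_start_page_shows_heading_and_button() -> None:
    html = render_start_page()
    # Assert against the content lookup, not the literal wording, so a
    # content designer can change the copy without breaking this test.
    assert t("start.heading") in html
    assert t("start.continue_button") in html


if __name__ == "__main__":
    test_start_page_shows_heading_and_button()
    print("ok")
```

If a content designer changes `CONTENT["start.heading"]` to new wording, the test still passes; it only fails if the page stops showing that piece of content altogether.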

The teams have also found it helpful to write content files in simple markup formats such as Markdown or Slim. These files are easy to edit and update in a basic text editor like Sublime Text.

Setting up the project in this way means content designers can take some of the burden off developers by making small copy changes directly in the application’s source code.

How do you do things?

We're always keen to learn and improve, so tell us how you're working by leaving a comment below.


3 comments

Comment by Peter Jordan posted on

All good advice, but surprised you've not mentioned quant data and analysis. Even at the discovery stage there is usually data available to analyse from legacy/offline services, and search analytics is an important input to content design, helping you understand users' language.

And when you are in beta and then live, you'll have data from users who are 'actually using' the service. Analysing their behaviour will identify issues for user researchers to explore. Vice versa, you can explore the data to see if issues identified by researchers are replicated at volume.

Comment by Martin Oliver posted on

Hi Peter

Good to hear from you and many thanks for commenting.

You make a number of great points. We absolutely agree that analytics play a vital role in developing user insights and guiding approaches. We'd also echo your thought that building in phases enables teams to track ‘real’ behaviours and then adapt their work accordingly.

However, while analytics are really important, I was keen to address a slightly different topic in this post. As many departments are now building services, we wanted to share our experience of how to structure teams and operate in a way that enables content to be integral rather than bolted on at the end.

Hopefully, getting things set up correctly in the first place means that delivery can happen more quickly. And that means we should be able to write more posts about analytics and user insights in the future.

Comment by Peter Jordan posted on

Hi Martin

Apologies for continuing a conversation in comments! I'm picking up on your point about avoiding content being bolted on at the end and heartily agree. And that was my point too, really. There's a risk that data and analysis are bolted on at the end too, when 'there's something to measure', rather than having an analyst engaged in sprints from a much earlier stage.