Service standards are vital for making sure the services we’re building are high quality, value for money and meet user needs. But how they are measured needs to change.

For a while now we’ve been finding the Service Standard assessment process pretty painful.

The usual approach is a four-hour assessment panel at the end of each delivery phase (alpha, beta, live).

However, this wasn’t working. Service teams were stopping delivery for up to a fortnight to prepare for what felt like an exam. Often this involved last-minute cramming and caused the whole team a lot of stress.

Then, of course, there are the times when services don’t pass the assessment, at which point they have to spend weeks - sometimes months - making improvements to their service before returning for reassessment.

So we’ve set about trying to change it!

Understanding the problems

We started by asking Delivery and Product Managers what they felt the biggest pain points were in the assessment process.

They highlighted 3 key issues with the existing process:

- It really disrupts delivery (up to 2 weeks lost for each assessment)

- It’s anti-agile

- Panel decisions can be inconsistent

Those with experience as assessors felt the model didn’t give panelists much insight into the team’s ways of working, and that a lot of the context of the service was often lost or misunderstood.

Despite these negative experiences, everyone still agrees with the principle of having service standards. It is how they’re measured that needs to change.

Out with the old and in with ‘Continuous Service Review’

So we decided to try something new, something we’re calling ‘Continuous Service Review’.

A Product or Delivery Manager from another service team acts as a Peer Reviewer. They sit in on sprint reviews (usually an hour a fortnight) and guide the team towards the Service Standard, challenging them where they don’t think they’re meeting it and seeking advice from specialists where needed.

Rather than booking an assessment weeks in advance and disrupting delivery to prepare for it, the Peer Reviewer and service team agree when the service is ready to go into the next phase.

At this point they’ll have a short Service Review session (one to two hours) with representatives of the different specialist areas (technical, design and user research), including at least one person who hasn’t been involved in the continuous review so far, to discuss the service in more detail and provide recommendations for the next phase.

Our journey here

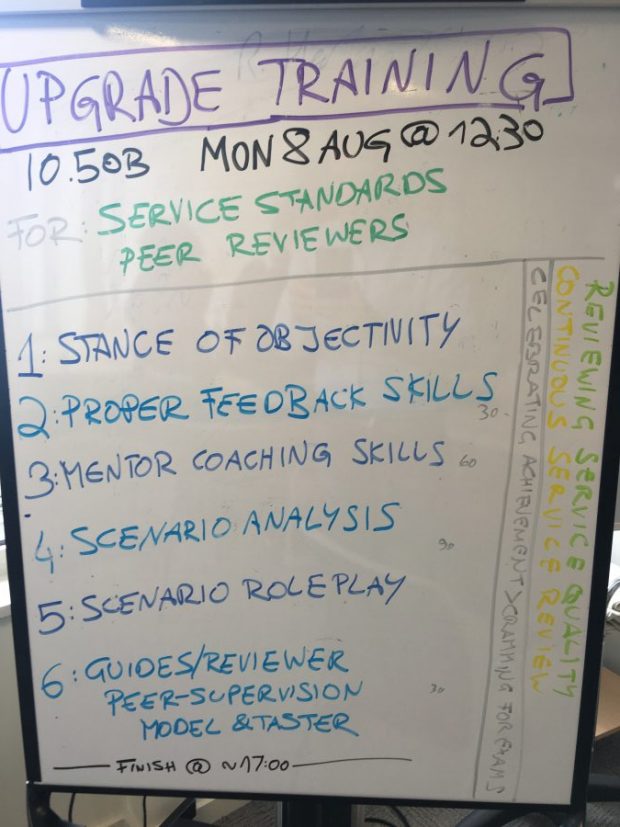

To get to this point, we’ve trained all of our PMs and DMs on the Service Standard. We’ve also trained those interested in becoming Peer Reviewers in useful skills such as giving feedback, being objective, applying the Service Standard to different types of service, and mentor coaching.

We’ve set up monthly Peer Supervision sessions where we get together to support each other, share our experiences and celebrate successes. These sessions help us keep a consistent approach across all services and continuously improve this new process.

Early results

So far we’ve taken two services through an alpha review using this new method, and both passed. In both cases, delivery was not disrupted in advance of the review session, so that’s an immediate win with the new process.

One of the DMs said she had been in four previous assessments and this was by far the most positive experience, feeling more like a conversation than an interrogation. We’re now working with eight services across MoJ Digital & Technology and our agencies, at alpha and beta, and hope to roll out our new approach more widely soon.

Lessons learnt

Our new approach isn’t without its issues. We’re aware that while it saves the service teams time, it has in some cases become quite burdensome for the Peer Reviewer - particularly when working with a less experienced team who require more support than just the sprint review.

We hope to mitigate this by making sure every team has a Peer Reviewer, so that while a team loses some time with their own PM or DM, they gain time with another who helps them meet the standard.

We’ll be monitoring this and will need to weigh it against any benefits to make sure the balance is right.

What next?

We’re working with GDS on their Service Standard Discovery work to learn from each other’s experiments and hopefully come to an agreement on a new approach.

We’re the first department to be making such significant changes to the way we assess products internally, so it’s a great opportunity for us to influence others and pave the way for a better quality review process for government services.

3 comments

Comment by Jonathan

This is brilliant stuff. Really looking forward to how this evolves in the future!

Comment by Darren

Having gone through a rather torturous Beta assessment earlier this year (which, in the end, we absolutely blitzed) I think this is a great idea. Arguably, if you're developing with the Service Standard in mind, you should have little to do to prepare for the Assessment but we all know that's not true; in my team's case, we'd had changes of personnel and of systems (Google to O365) and so there were evidential points which had to be re-created, and we were working with a Service Manager who didn't understand digital/agile and had been burned by a previous Assessment... I'd be really interested to know how you get on with this!

Comment by Luke

Agreed, there is still a way to go. I'm currently going through the process. I've found it leaves those reviewing in the dark, especially if they are new to the process. Being remote makes sense in theory but I suggest mixing in a kick-off meeting as standard - to put names to faces and have initial questions answered.