While GDS has been working on improving what we assess our digital services against, here at MOJ Digital we’ve been rethinking how to approach our service assessments.

Our experience is that assessing services at the end of each product phase encourages all of the negatives of ‘waterfall gates’. Effort is focused on a single end point, and any failure surfaces too late to correct the product.

To avoid this risk, we’re adapting our approach so that service assessments act as a corrective tool throughout the development cycle, encouraging teams to fail early and often and to learn quickly.

Continuous assessment with the PQ Team

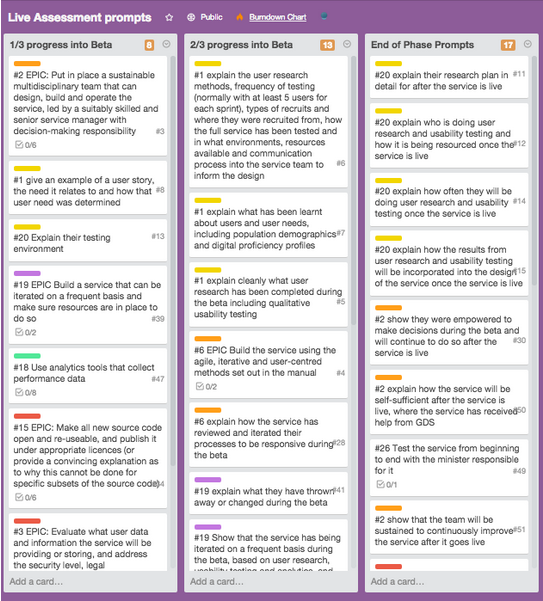

The Parliamentary Questions Tracker team trialled making assessment prompts a part of their product backlog. This meant that prompts were prioritised against other work and were continually addressed by the team. Assessors were notified and regularly reviewed progress with the team.

Although this new approach was very successful, its benefits were outweighed by the time and effort it required from the assessment panel. That would create major scalability issues if it were deployed more widely across MOJ Digital, or even GDS.

Moving to a new model with regular assessments

If continuous assessments are too resource-intensive and unable to scale, what is the answer?

Our suggestion is that services should be regularly assessed. This means we put in place a number of smaller assessments during development with prompts at relevant stages:

- Initial prompts focus on the team and the tools in place to develop the service. The aim is to ensure that the team is set up to deliver well from the start.

- Questions during the phase centre on user research, the way the team is working and changes that have been made to the service. The aim is to ensure the service is being iterated to improve the user experience and that the team is working in an agile, user-centred way.

- Prompts at the end of the phase focus on what’s next - is there a user research plan and team in place for the next phase of development?

And the result is...

We believe that adopting this regular assessment approach offers important benefits, including:

- giving assessors more power to provide timely guidance and corrections

- helping teams to react quickly and early to feedback

- ensuring failures are caught and corrected early, so they don’t affect the final assessment outcome

Let’s share

Do you have a different approach, or thoughts on what we’re doing? We’re open to further improvements in how we approach service assessment and would love to hear your ideas, input and experience in the comments section below.

3 comments

Comment by Sean Fox posted on

From a 'mobile app for enterprise' business perspective, it is critical that throughout the journey there are assessments and feedback gathering points. Cracking effort and great to see the UK Government embracing digital like this.

@seanryanfox

@CommonTime

Comment by Jack posted on

Thanks for the words of encouragement, Sean. We're always trying to find better ways to improve services for citizens at MOJ Digital.

Comment by Mark posted on

We are doing something similar (on a DWP project). We have a set of constraints (non-functional requirements, if you like) including assessment criteria (such as the GDS ones). At each Sprint planning session we check each story against the constraints and add acceptance criteria for how that story will be shown to have satisfied that constraint (if relevant). The story is not considered done until we have evidence that it has met those acceptance criteria.

The only non-Sprint bit is that there are some things we have to do to satisfy constraints which depend on things outside the project. We create these as tasks and link them to the appropriate constraints but do not include them in Sprints.

Result is that we are constantly developing a report on how each of the requirements is being satisfied as an integral part of the development. We can then share it with whichever external assessment team requires it. It also means we can have pretty charts showing at a glance how far along we are in satisfying each constraint.

It is still fairly early days, but it seems to be reasonably lightweight and useful. I guess we will know if it is sufficient when we reach our assessment for going to public beta.